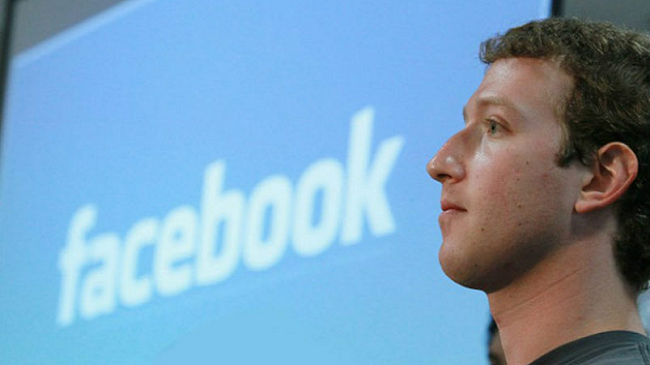

Have you seen some “tips to spot fake news” on your Facebook newsfeed recently?

Over the past year, the social media company has been scrutinized for influencing the US presidential election by spreading fake news (propaganda). Obviously, the ability to spread completely made-up stories about politicians trafficking child sex slaves and imaginary terrorist attacks with impunity is bad for democracy and society.

Something had to be done.

Enter Facebook’s new, depressingly incompetent strategy for tackling fake news. The strategy has three frustratingly ill-considered parts.

New products

The first part of the plan is to build new products to curb the spread of fake news stories. Facebook says it’s trying “to make it easier to report a false news story” and find signs of fake news such as “if reading an article makes people significantly less likely to share it.”

It will then send the story to independent fact checkers. If fake, the story “will get flagged as disputed and there will be a link to a corresponding article explaining why.”

This sounds pretty good, but it won’t work.

If non-experts could tell the difference between real news and fake news (which is doubtful), there would be no fake news problem to begin with.

What’s more, Facebook says: “We cannot become arbiters of truth ourselves — it’s not feasible given our scale, and it’s not our role.” Nonsense.

Facebook is like a megaphone. Normally, if someone says something horrible into the megaphone, it’s not the megaphone company’s fault. But Facebook is a very special kind of megaphone that listens first and then changes the volume.

Facebook is like a megaphone. Enrique Castro-Mendivil/Reuters


The company’s algorithms largely determine both the content and order of your newsfeed. So if Facebook’s algorithms spread some neo-Nazi hate speech far and wide, yes, it is the company’s fault.

Worse yet, even if Facebook accurately labels fake news as contested, it will still affect public discourse through “availability cascades.”

Each time you see the same message repeated from (apparently) different sources, the message seems more believable and reasonable. Bold lies are extremely powerful because repeatedly fact-checking them can actually make people remember them as true.

These effects are exceptionally robust; they cannot be fixed with weak interventions such as public service announcements, which brings us to the second part of Facebook’s strategy: helping people make more informed decisions when they encounter false news.

Helping you help yourself

Facebook is releasing public service announcements and funding the “news integrity initiative” to help “people make informed judgments about the news they read and share online”.

This – also – doesn’t work.

A vast body of research in cognitive psychology concerns correcting systematic errors in reasoning such as failing to perceive propaganda and bias. We have known since the 1980s that simply warning people about their biased perceptions doesn’t work.

Similarly, funding a “news integrity” project sounds great until you realise the company is really talking about critical thinking skills.

Improving critical thinking skills is a key aim of primary, secondary and tertiary education.

If four years of university barely improves these skills in students, what will this initiative do? Make some YouTube videos? A fake news FAQ?

Facebook has a depressingly incompetent strategy for tackling fake news. (Shailesh Andrade/Reuters)


Funding a few research projects and “meetings with industry experts” doesn’t stand a chance of changing anything.

Disrupting economic incentives

The third prong of this non-strategy is cracking down on spammers and fake accounts, and making it harder for them to buy advertisements.

While this is a good idea, it’s based on the false premise that most fake news comes from shady con artists rather than major news outlets.

You see, “fake news” is Orwellian newspeak — carefully crafted to mean a totally fabricated story from a fringe outlet masquerading as news for financial or political gain. But these stories are the most suspicious and therefore the least worrisome. Bias and lies from public figures, official reports and mainstream news are far more insidious.

And what about astrology, homeopathy, psychics, anti-vaccination messages, climate change denial, intelligent design, miracles, and all the rest of the irrational nonsense bandied about online?

What about the vast array of deceptive marketing and stealth advertising that is core to Facebook’s business model?

As of this writing, Facebook doesn’t even have an option to report misleading advertisements.

What is Facebook to do?

Facebook’s strategy is vacuous, evanescent lip service: a public relations exercise that makes no substantive attempt to address a serious problem.

But the problem is not unassailable.

The key to reducing inaccurate perceptions is to redesign technologies to encourage more accurate perception. Facebook can do this by developing a propaganda filter — something like a spam filter for lies.

Facebook may object to becoming an “arbiter of truth”. But coming from a company that censors historic photos and comedians calling for social justice, this sounds disingenuous.

Facebook created controversy in September 2016 after censoring a historic image from the Vietnam war. NTB Scanpix/Cornelius Poppe/via Reuters

Nonetheless, Facebook has a point. To avoid accusations of bias, it should not create the propaganda filter itself. It should simply fund researchers in artificial intelligence, software engineering, journalism and design to develop an open-source propaganda filter that anyone can use.

Why should Facebook pay? Because it profits from spreading propaganda, that’s why.

Sure, people will try to game the filter, but it will still work. Spam is frequently riddled with typos, grammatical errors and circumlocution not only because it’s often written by non-native English speakers but also because the weird writing is necessary to bypass spam filters.

If the propaganda filter has a similar effect, weird writing will make the fake news that slips through more obvious. Better yet, an effective propaganda filter would actively encourage journalistic best practices such as citing primary sources.

Developing such a tool won’t be easy. It could take years and several million dollars to refine. But Facebook made over US$8 billion last quarter, so Mark Zuckerberg can surely afford it.

About the author: Paul Ralph is a Senior Lecturer in Computer Science at the University of Auckland.

This article was republished from theconversation.com.